Business Intelligence In One Weekend

Version 1.2.1, 2019-02-04

Copyright 2019-2024 Iyad Horani. All rights reserved.

Overview

How do you choose a Business Intelligence (BI) solution for your company? Do you search for reviews about a particular tool? Do you perform a Tableau vs Power BI search? Aside from the feature lists and rating, how do you know that Tableau is the better solution for your business?

If someone is familiar with BI technologies, then they'd first understand the business type, the intended outcome of the BI tool, and the current technology stack used by the business. Those questions make figuring out the right BI solution an easy task, because this person posses the required knowledge of the BI technologies core components, features and usability features. If you're not that person, then I would a guess that those "vs" searches didn't help out in giving you confidence that BI tool X is the right choice for your business.

If you're not that person who's familiar with BI technologies, but you have the interest and desire to start using Business Intelligence tools to add to your business, then this guide for you. We wrote this guide to be as complete as possible to answer all your questions about BI, be it price, salary expectations for Data Analyst, and even features.

However, rather than listing different tools and features, we took a different approach. This guide is educational, and it's meant to take you from zero to hero in understanding everything you need to know about BI including:

what is a BI technology,

how do you use those features,

how those features differ from one solution to another,

how will it affect your business,

what else do you need to purchase along with a BI solution,

Who else do you need to hire, or do you need to hire anyone?

Which solution can you pick up today and know that it gives your business the results you need today?

And many more answers.

This guide takes you on a journey of becoming knowledgeable about BI. We're not aiming to make you an expert and implement one your self, unfortunately getting you to this point requires 20x more the size of this guide. Instead, this knowledge helps you form a more educated and informed decision whether your business needs a BI solution today, what is involved in deciding to implement a BI solution, and more importantly, how much is it all going to cost your business and will it have the required ROI.

There is a lot to be excited about BI and the promises a BI delivers to a business. We know this because Forbes states that insights-driven companies are on track to earn $1.8 trillion by 2021. Forbes We also know that BI can be notoriously complex and expensive to implement, often taking six months or more. Which associate BI with fear for many companies. Should a company invest a considerable sum on implementation and new hire, wait for six months before seeing a positive effect on the company's bottom line? We understand the dilemma and the risks businesses faces when they choose to adopt BI without knowledge of what BI involves. You can mitigate this risk by equipping yourself with just enough knowledge and framework of a proper BI implementation.

The ideas, concepts and principles in this guide are logical and straightforward to understand and implement. Technical concepts are built upon chapter by chapter and are introduced solely because of their importance to the overall implementation solution.

Today, there's a BI tool for every business size and challenge. Tools that are smarter, and solutions that are accessible than it used to be 15 years ago. The BI market which is expected to grow to a $29.48 billion by 2022. Reuters

For some this means more "vs" searches to perform, for those reading this guide, they'll know if a BI tool is the right one for their business just by skimming through the features list.

We hope you enjoy reading this guide as much as it did for us writing it. We're confident we'll answer all your all your questions about Business Intelligence.

TABLE OF CONTENTS

- Ch.1

What is Business Intelligence - Ch.2

Business Intelligence Functions - Ch2.1 Reports and Widgets

- Ch2.2 Dashboards and Data Walls

- Ch2.3 Drillthrough

- Ch2.4 Alerts and Scheduled Reports

- Ch2.5 Analytics

- Ch2.6 Augmented Analytics

- Ch.3

Practical BI Use Cases - Ch3.1 Performance Marketing

- Ch3.2 Process Optimisations

- Ch.4

Business Intelligence Components - Ch4.1 Data Sources

- Ch4.2 ETL

- Ch4.3 Connectors

- Ch4.4 Data Warehouse

- Ch4.5 Data Preparation

- Ch.5

Business Intelligence Skills - Ch5.1 Development

- Ch5.2 Analytics

- Ch.6

Business Intelligence Helpers - Ch6.1 ETL Helpers

- Ch6.2 Data Preparation Helpers

- Ch6.2.1 Paxata

- Ch6.2.2 Tableau Prep

- Ch.7

Business Intelligence Tools - Ch7.1 Self-Service BI vs Traditional

- Ch7.2 DataBox

- Ch7.3 Tableau

- Ch7.4 Microsoft Power BI

- Ch7.5 Infor(Birst)

- Ch7.6 Salesforce Einstein Analytics

- Ch7.7 Gartner MQ in Analytics and BI

- Ch.8

Conclusion

Ch1. What is Business Intelligence

The term Business Intelligence (BI) refers to technologies, applications and practices for the collection, integration, analysis, and presentation of business information. This chapter will introduce the BI technologies, the types of intelligence a BI provides businesses and the type of questions a company can answer through a BI solution.

Business Intelligence (BI) is a technology-driven process that leverages data a company already have to convert knowledge into informed actions. The process analyses a company data and presents actionable information to help executives, managers, and other business end users make informed decisions and measure the health of the business.

BI can be used by companies to support a wide range of business decisions ranging from operational to strategic. Fundamental operating choices include product positioning or pricing. Strategic business decisions involve priorities, goals, and directions at the broadest level. In all cases, BI is most effective when it combines data derived from the market in which a company operates (external data) with data from company sources internal to the business such as financial and operations data (internal data). When combined, external and internal data can provide a complete picture which, in effect, creates an “intelligence” that cannot derive from any single set of data.

The ability to combine data source is what sets BI apart from regular reporting and analysis tools found in modern cloud applications. One example of this is Google Analytics (GA). GA provides a company with a comprehensive activity view of all website visitors. GA also allows linking to other Google products inside of GA (AdSense, Google Ads, Google Search Console, and even Google Play). However popular, Google still offers Data Studio. A stand-alone BI tool that imports data from GA, Google products and other external sources. So what's the added value of Data Studio over GA?

Let's say we wanted to know the different channels responsible for the sale of 50 Databox online course this month. In GA we could identify that 50% of the traffic originated from an organic source. Organic means a search made on Google and the prospect opted to click on our website. Knowing the search terms responsible for the conversion would allow us to generate content that targets those keywords, which eventually have the potential to increase our conversion rate from organic search and decrease our ad spend. However, GA does not tell us which organic terms are responsible for the conversion. At this point, we can check the acquisition tab and look through the search console panel to analyse the organic search traffic. The report gives us a list of all search terms that resulted in having IRONIC3D appear as a search result, and which terms resulted in clicks to our website. Useful as it is, there is still a wide gap between organic traffic and knowing which terms were responsible for the conversions. This knowledge is the difference between having several fishing nets in multiple locations versus a single fishing net in a specific bay. With Google Data Studio we can import the raw data from both platforms and perform analysis to determine to a certain degree which search terms were responsible for the conversions.

So what are some examples of intelligence a company can have with BI tools:

Suppose you run an online store selling designer shoes. Your main channels for acquiring sales are through Google AdWords, Facebook, Instagram, Newsletters and organic search. Each channel is a separate application with its analytics interface, so you can check from newsletter application how many people clicked on your email link, your accounting software tells you who bought the items, which at this should be enough and roll out that the Newsletter as the reason for the purchase. However, looking at data from silo sources doesn’t give you the purchase lifecycle, and more importantly, what was the last conversion point responsible for the purchase:

Did person X click the link and went straight to purchase the shoes?

Alternatively, did person X click on the email link, decided to take a couple of days to think about it, saw your advertising on Instagram, read the positive comments and then decided to purchase?

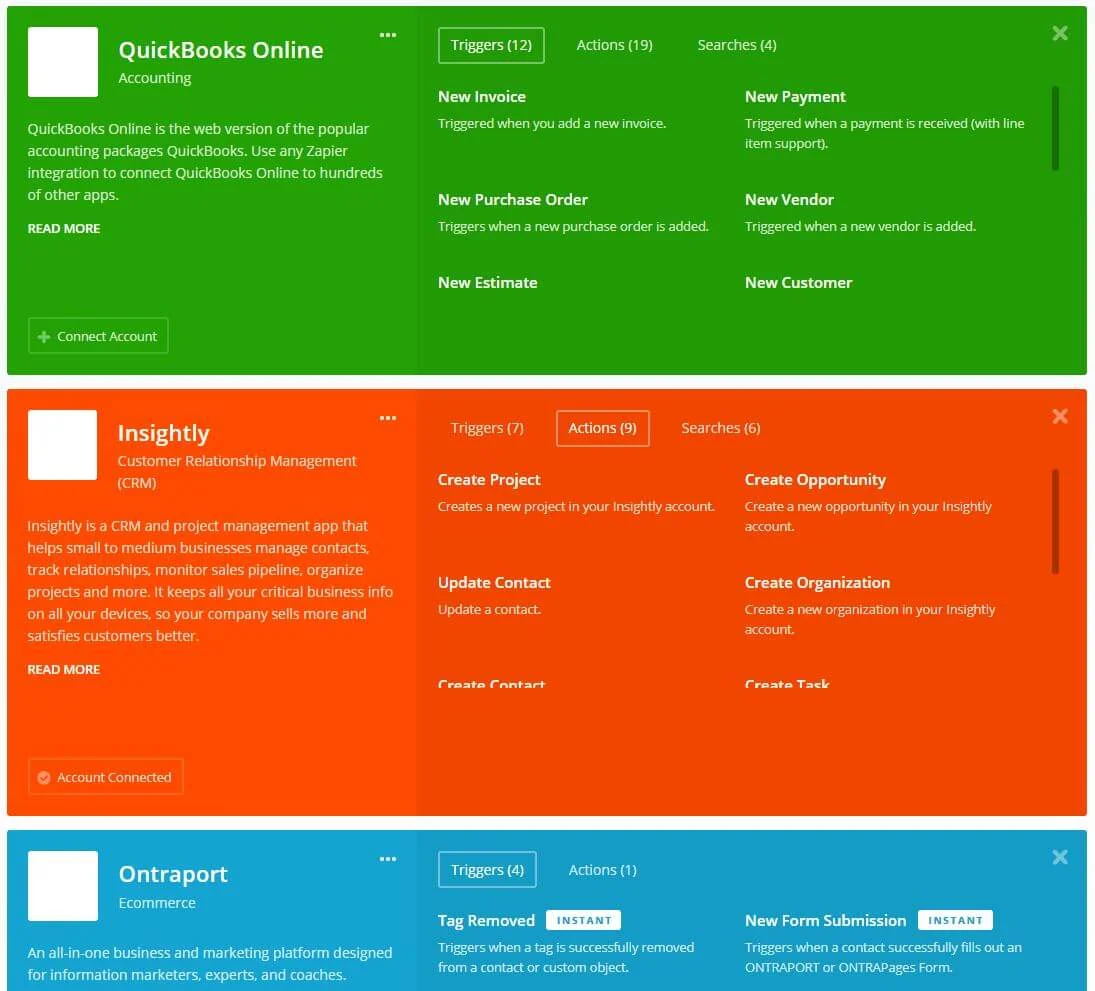

Intelligence can also happen within a single application, Let’s take Insightly as an example. Insightly is a business CRM with many sophisticated capabilities. One feature in particular that we find extremely useful at IRONIC3D is the relationships feature. Relationships allow Insightly to links contacts together (directional linking, both forward and reverse relations), as well as linking companies together. So you can define a new relationship of a parent company, and it's subsidiary companies, or a coach and a client relationship, parent and child relationship, marital relationships, friendships as well as ex-employments. These types of relationships are beneficial if your business depends on cultivating relationships with your clients. Unfortunately, there isn’t an easy way to visualise all of your contacts and all the associated relationships, in fact, you’ll have to check each contact individually to see what type of a relationship that contact has. You cannot even list and segment those relationships to see who’s who, for example, you cannot list all influencers or the most active influencer. Unless Insightly builds this interface for you, there is no way to get this type of reports. (Note: Insightly introduced a visual map for contacts in its recent update. This feature is for Enterprise subscribers only). With BI however you can extend that reporting capability and build as many reporting views for relationships as you deem fit. You don’t have to wait for the vendor to update their software, nor you need to hire a developer to extend the system.

BI can also be used to extend and implement your business roles. A typical example of this is commission calculation. If your product invoicing depends on multiple delivery dates, then most probably your finance team is performing that calculation using Excel or Google Sheets, however, performing commission percentage is easily accomplished with a BI tool, it's also happening in real-time.

Lastly, you can BI as an early notification or an alarm system for the most critical business metrics. Not only that, but it can also tell you where exactly you need to look. An example of this is a services sales. Sales is a result of various marketing and sales steps, so if you’re selling a service and have a specific target for each month, it’s not very useful to know that you won't be meeting this months sales target. On its own, this doesn’t tell you what needs to happen to meet the sales target. With a BI tool, you can break the marketing and sales process and assign targets for each step, and assign a target, or a threshold, for each step. Thus, if one of the steps is below the threshold, you’ll be notified about that step.

Simply put, BI is required to operate a business. It provides an interface that empowers decision makers to discover answers to specific questions that affect business performance.

With BI we typically examine data to look for three main areas of interest:

1. Aggregations, looking at grand totals, for example. How much online courses sold last year? Beyond just simple totals is the ability to slice and dice, as it is called, breaking down totals into smaller pieces. How much online courses were sold by a state? For each state, how much was sold by subject? Business intelligence tools provide the ability to break these totals down into smaller pieces for comparison and analytics purposes.

2. Trends, knowing the current totals are significant but just as important is knowing how those totals change over time. Knowing these changes can help you decide how well a product is doing. Had the sales for a specific demographic of online courses increased or decreased over the last year? Knowing this can help you decide whether to place a course on sale, increase the price, or take it off the market altogether.

3. Correlations, tools such as data mining and machine learning are used to find correlations that might not be obvious. A favourite story among BI enthusiasts is the correlations uncovered by a major store chain between diapers and beer. When data mining was applied, they found a strong link between the sales of the two. When beer sells tended to peak, so made the diaper sales. Salespeople surmised that individuals on their way home to pick up diapers would also grab some beer. As a result, the diaper sections and beer sections moved close to each other further increase sales, all because of the power of correlations.

A BI tool allows you to explore those areas of interest in the form of questions, you ask your data questions, and your data answers through a visual response. The type of questions asked on data can be categorised into How, Why, and What.

How questions the state of the business. At the current time or in the past.

How are we doing on project deliveries and are we on time?

How did sales person x perform last month?

Why questions are to find the reason behind a particular business result. A result that happened already. What Why tries to find is a relationship between data that causes changes.

Why are current sales down this month?

Why is product x not performing in this period compared to the previous period?

What questions focuses mostly on the future of the company, past time, but it’s predominantly used for future time states.

What are our current profit margins look?

What will our profit margin look like in 3 months time?

What did we deliver this past month?

A BI tool allows for grouping of those question into one or more views, which provides continuous real-time answers to key-business metric, and accessible to all business parts. The grouping ensures teams are always aware of the company immediate, short or long-term goals, as well the drivers required to reach those goals.

Finding a single BI application that answers all questions of How, Why and What of business are uncommon. Most BI applications are specialised and deal mainly with the current business state and past state. The future state requires additional specialised software that either work alone, or side-by-side with a given BI application.

As such, the first differentiation factor between BI application is how versatile a BI application can deal with current, past and future states of business. There are BI applications that are specific to aggregation, others allow for trend, and a few allow for correlations as well. Finding the right BI tool depends on the business area of interests a BI tool needs to examine, as well as the type of questions the business needs to answer.

Is your business interested in a BI application that wants answers to the current state, past state or future state?

Is your business interest in data is to have answers about the Why, the How, or the What?

Where do these questions fall into, are they in the immediate business needs, short-term needs, or a strategy?

Which one is more important for you now?

Also, BI technologies provide historical, current and predictive views of business operations. Typical functions of BI technologies include:

Reporting

Analytics

Data mining

Process mining

Complex event processing

Business performance management

Benchmarking

Predictive analytics.

Having a clear understanding of where your business needs lies and the type of questions your company is looking for is the first step to narrowing down the best BI solution for your company.

BI technologies are verstile. providing historical, current and predictive views of business operations. Typical functions of BI technologies include:

Reporting

Analytics

Data mining

Process mining

Complex event processing

Business performance management

Benchmarking

Predictive analytics.

Having a clear understanding of where your business needs are, and the type of questions your company is looking for is the first step to narrowing down the best BI solution for your company.

Ch2. Business Intelligence Functions

BI functions are the first thing people read when researching a BI tool, so it makes sense for us to start exploring them. This chapter will discuss each function and explain what they are and how are they used in real-world scenarios. We'll also explore the weight each function holds on the BI application pricing.

- Ch2.1 Reports and Widgets

- Ch2.2 Dashboards and Data Walls

- Ch2.3 Drillthrough

- Ch2.4 Alerts and Scheduled Reports

- Ch2.5 Analytics

- Ch2.6 Augmented Analytics

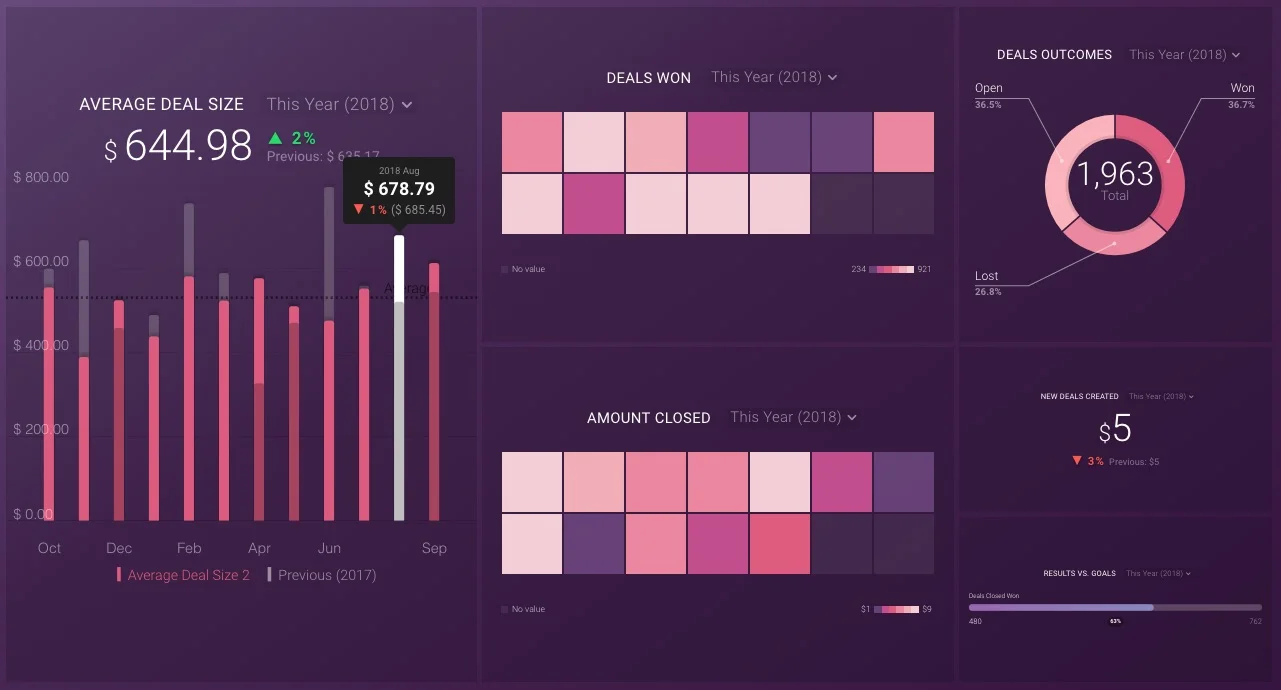

Reporting is the means of displaying data inside a BI tool in a visual manner. A report represents a view that is of interest for your business. In other words, it presents the results of a question. Typically a BI tool will have an arsenal of visuals to show the result in any number of ways.

Reports are sometimes called widgets or tiles, the naming between BI tools might differ, but the functionality is the same across BI tools. The only difference between each BI tool is the levels of customisation that are available to you.

On a basic level, a report should display the dimensions and measures along with a time series, as well enough customisation options to the look and feel of the visual (e.g. colours, header names, font, size). Advanced reports allow for layering of multiple data on the same visual for comparison, or even analytical data such as trend lines. Some specialised BI tool will also allow for a deeper level of customisation to the look and feel of the report (Tableau is one of those tools, it position itself as a Visualisation Tool rather than a BI tool because of the many customisations it offers)

We've mentioned that a report displays data which include dimensions, measures and time-series, let's give a brief definition of what those means.

Dimension is an attribute that categorises your data. So if you’re looking for sales by country, the country is a dimension in this case. Dimensions are also a way to navigate the data to get a different view. For example, you might want to see product performance over time, then get a different point of view and see regional performance over time. These categories provide perspectives which are called dimensions.

So dimensions can be anything which can consistently categorise your data, and provide you with a better point of view.

A measure is a numerical value that quantifies the data set you are digging into to understand better. They also provide meaning to your dimensions. So in the example of sales by regions, the sale value is a measure.

A collection of measures of the same type can be summed, averaged, floored or apply an arithmetic operator to aggregate them.

Metric is not the same as a measure. A measure is a number derived from taking a measurement. A metric, however, is a calculation between two or more measures.

Calculated Metrics are user-defined metrics that are computed from existing metrics and drive more relevant analyses and enable greater actionability without leaving the product. For example, profit is a calculated metric that is calculated from the Revenue metric, Operating costs metric and any other liabilities to the business.

Time-Series is how you analyse your measures by date. The usual time series is today, yesterday, this week, last week, week to date, this month, last month, month to date, this financial year, last fiscal year, this quarter, etc.

Time series is an especially important feature, often overlooked. Depending on where your company is, a proper BI tool should allow creating custom time series. For instance, if your business operates in Australia, you would want to measure your revenue based on AU financial year, which starts from 1st of July till the 30th of June. If a BI tool doesn't allow for custom time series, then your fiscal year will default to 1st of Jan till the 31st of Dec.

Reports can either be static or dynamic. With static reports, the resulting data displayed doesn't allow the end user to change its content. So one cannot perform filtering on the data to show different time series or include additional data in the form of check-boxes or drop downs menus. Static reports are useful to generate a final report or daily, weekly, or monthly reports that are delivered to a person or a department by email.

Dynamics reports allow for a report to be filtered and show different results. An example of this would be to filter product sales by financial year, region and category.

All BI tools will provide a similar set of standard reports. Report widgets are eye candy and a huge selling point in the promotion of any BI tool. However useful and visually appealing they may be, reporting widgets don’t have a massive influence on the overall pricing of a BI tool. Some BI tools might include additional functionalities to the report widgets by the following three two points:

Can a report have visual filters presented alongside the report, in the form of the input area, drop-downs, check-boxes?

How customisable is a report? Does the BI tool give you access to a scripted interface to manipulate the underlying code behind a visual? Or can you write your custom visual?

Can the BI tool allow for the business or a 3rd party vendor to create custom widgets (e.g. Power BI enables downloading of custom widgets from an online marketplace)

Embedded filters are a usability feature that is of importance, as it’s directly related to workflow and how to make the final reports accessible and usable by the end user. So look beyond the pretty reports and instead check the degree of report interaction.

Extending the widget library with 3rd party widgets is useful however it should not be a deciding factor for choosing a BI tool. There reason all BI tools ship with the same standard reports is because those visuals are widely used, and are considered the standard way to represent data. If your reporting needs are particular, find the tool that will provide those reporting visual out of the box.

- The exception to this rule is the Geo Mapping BI tools (e.g. CARTO or ArcGIS from Esri). These tools are specific for displaying geolocation data, so the map is at the centre of the display, showing all reports on top of the geo-map.

Reports or Widgets do play a pivotal role in the final pricing of a BI tool, not on features, but instead, on the licensing model. In such cases, pricing is on the overall reports that that is available to a single license or account. More tiles will increase the monthly cost of the license cost.

A dashboard is a collection of reports, mostly similar, placed alongside one another on the same page. Dashboards main function is to tell a story at a glance.

Dashboards are another standard feature in BI and are comparable between applications, the difference in dashboards between BI tools is with the usage which can be any one of the following:

1) Multiple dashboards that you navigate from one to another like an image gallery, also known as a Data Wall.

2) One main dashboard that contains several reports, each report links to a Report View, the report view is a page with several related reports.

3) Drillthrough dashboards which allow for inter-linking and hierarchy between dashboards.

Those types of dashboards differ in usability as well. Data Wall dashboards can only filter the whole view with a date filter, so picking Today filters all reports on the dashboard to display today's data. Report Views, on the other hand, don't allow for the filter to be implemented on the main dashboard, while the report view itself can filter with selector common to the reports, be it a date filter, a name filter or a product filter. The Drillthrough dashboards are much more customisable since each view is a dashboard, with filters embedded in any dashboard.

Dashboard functionality ties directly to the type of BI tool, SSIB tends to offer Data Walls and Report Views, while traditional BI tools provide Drillthrough dashboards.

There are many design patterns for grouping and assembling dashboards in a BI tool. The most common of those is the Value Based Design.

Regardless of the BI type, there needs to be a logic in designing BI dashboards or report views, or else you risk having a highly nonfunctional BI dashboard that is of no use to anyone. To design a functional dashboard you need to choose a design pattern. The one we find most natural for a business is a Results-Based Design.

Results-Based Design

A results-based design assembles data from the bottom up, with the topmost level being the Key Results Indicators (KRI), one level below is the Key Performance Indicators (KPI). KPI’s are the drivers for the KRI’s. One level down is the Action Points responsible for each KPI, followed by the User Roles, and finally, the underlying data and decisions data.

Once the dimensions, measures, metrics assembled in a Value-Based Design map, we group the reports into several dashboards broken down in the following logic:

The Three Stages of a Results-Based Design

1- Display - Are we ok?

This dashboard is the first dashboard the business unit sees. It hosts all of the KRI’s and the Drivers to give the end user an overall health check on the business.

2- Diagnose - Why?

The Diagnosis pages enable heavy-duty analysis, usually assembled in 2 or more dashboards. Also, it’s where real analysis and diagnosis occurs. The goal of each diagnostic dashboard is to enable diagnosis of issues in a manner that leads to action.

The reports found within the diagnostic dashboards show the interaction of drivers, KRI's and action points with a with the use of filters.

Generally, Diagnosis pages are organised by either:

KRI Drivers (e.g. Receivables, Deal Size, Close Rate)

Key Action Points (e.g. Products, Channels, Locations)

3- Decide - What to do?

The decide dashboards are detail pages which enable users to take finite actions to improve KRI and KRI drivers. These dashboards are where most analysis ends up, with a list of specific items on which to take action as filtered by the analysis performed on the Diagnosis pages.

Dashboards functionality have a good impact on the BI application price because it determines the functionality and usage of the BI tool. To make it a point of differentiation between similar BI tool, vendors include Dashboard as part of the licensing model as following:

Number of Dashboards: How many dashboards are available for each account and price tier?

Security: Can you set permissions to assign specific dashboards to individuals or departments?

Embeds: Can a dashboard be inserted to a website or another platform?

An embedded live report from Microsoft Power BI

Dashboards are meant to give the user a complete picture for the health of the business at a glance. Simplicity and concise reports are critical to an excellent Dashboard design. Dashboard features such as embedded filters, drill through, security, and dashboard integrates onto a website are part of the business case, which needs researching at the beginning of the project.

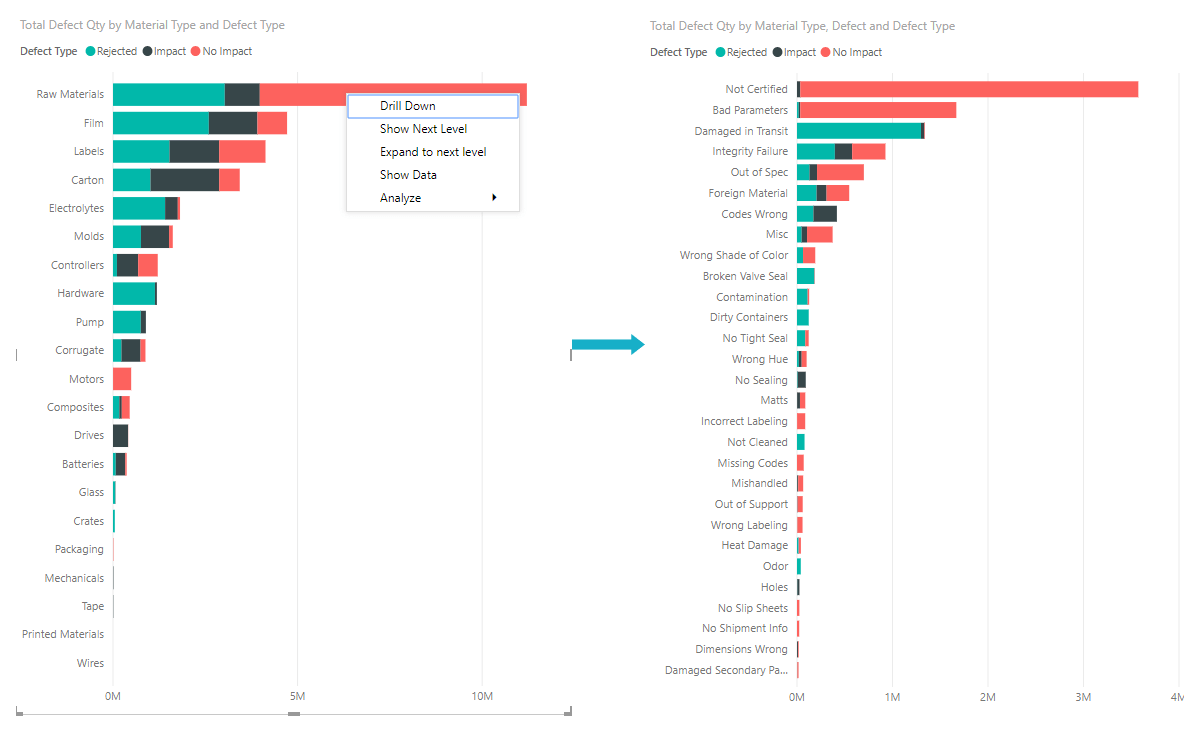

If the BI tool allows for the creation of hierarchies, then you setup drill through (or Drill-in) paths which allows you to drill down from one report to another to reveal additional details. For example, you might have a visualisation that looks at Olympic medal count by a hierarchy made up of sport, discipline, and event. By default, the visualisation would show medal count by sport (e.g. gymnastics, skiing, aquatics). However, because it has a hierarchy, selecting one of the visual elements (such as a bar, line, or bubble), would display an increasingly more-detailed picture. Select the aquatics element to see data for swimming, diving, and water polo. Select the diving element to see details for the springboard, platform, and synchronised diving events.

In some instances, Drill-ins can be a link between two separate reports that moves the user from one dashboard to another filtered dashboard specific to the selection made in the previous one.

Drill-ins are an analysis helper feature, as it simplifies the steps and time required to Drill-in to the source of why the data is showing the way it is. As such Drill-in and Drill-through are not available in many SSBI tools, especially the Data Walls BI tools, instead its included in advanced SSIB tolls and well as traditional BI tools.

When evaluating a BI tool get a firm understanding of how the final output is used in the business and by which department, and if drill-ins is a core requirement for the company.

There is an embarrassing about BI regardless of the solution you end up choosing for your company, and that is despite your best efforts, most of the people in the company end up not using the BI application, either entirely or occasionally.

There is a human psychology aspect of how people process information differently from one another, from left brain versus right brain, creative vs analytical, visual versus auditory, convergent versus divergent thinking. These factors play an essential role in the implementation of a BI tool within a business and need to be addressed and accounted for early in the process. The truth of the matter is, BI is implemented by analytical people, which makes some BI implementations overwhelming challenging to discern on a daily basis beyond the people who designed it.

Giving the keys to the BI tool implementations to every department head or team leader to design their measures, reports and dashboards is not the solution. In one instance this was tested, and the manager ended up creating several dashboards, each dashboard having multiple reports with 60+ columns in each. No one in the team was able to read it or use it, not even the manager who created it used it.

Fortunately, this is a constant problem every BI vendor faces. So BI vendors came up with a myriad of clever solutions to increase the retention rate of BI usages in a company. Here are the most popular solutions:

1. Scheduled Reports

Scheduled reports are a summarised report. These reports provides a view to a certain situation. Some example of scheduled reports are:

Fulfillment lists

Delayed projects

New signups

Quarterly pipeline activity

Weekly sales forecast

New collections this week

Scheduled reports are delivered by email that is set to send designated recipient at a certain period of the day.

Repetitive reports are better suited to be delivered automatically, rather than relying on the user to search for the data to act on it, the BI tool should deliver it automatically at certain periods of time based on when this data is required.

Scheduled reports are great for providing the right data at the right time for the right person.

2. Alerts

Alerts are reports or messages that are sent to a user or a department which are not time-dependent, instead, they are triggered (activated) on specific conditions that the analysts or user specifies. Alerts are great to pull your attention into a situation that requires immediate action or alerts you of a possible risk if the current path stays the same.

Some examples of alerts are:

Advertisement campaigns are under delivering

Over delivered campaigns (overspending)

Account deletions

Refund requests

Drivers (KPI) not reaching the intended target.

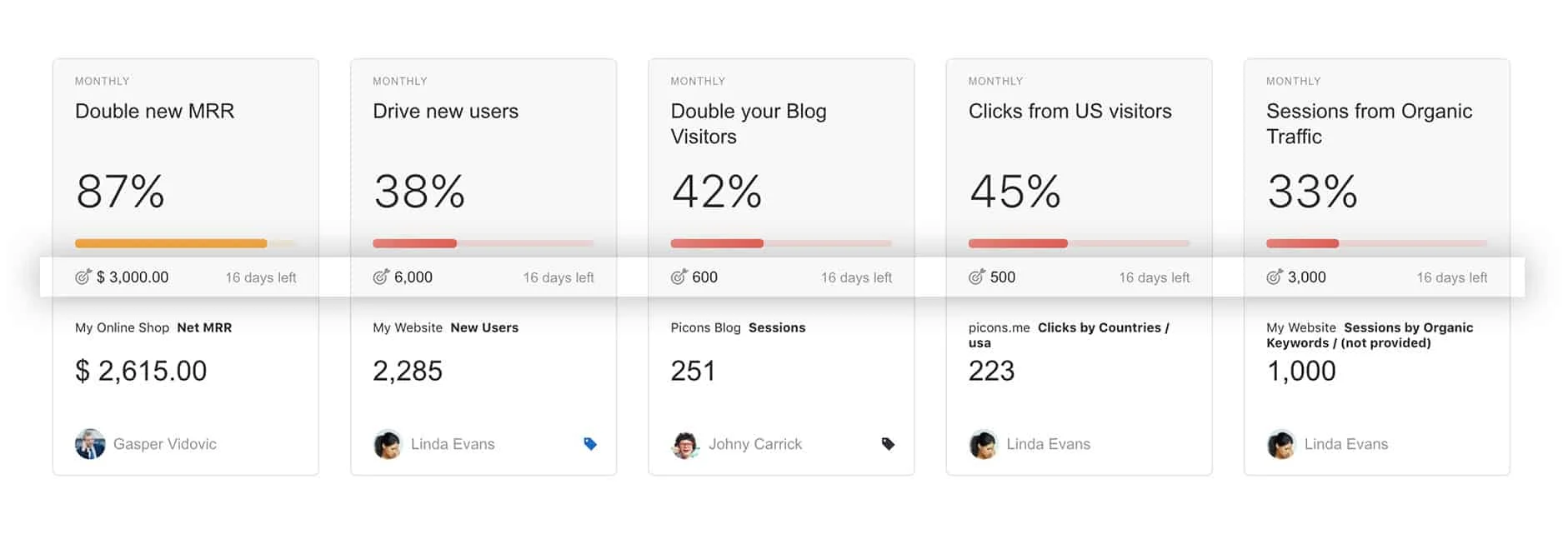

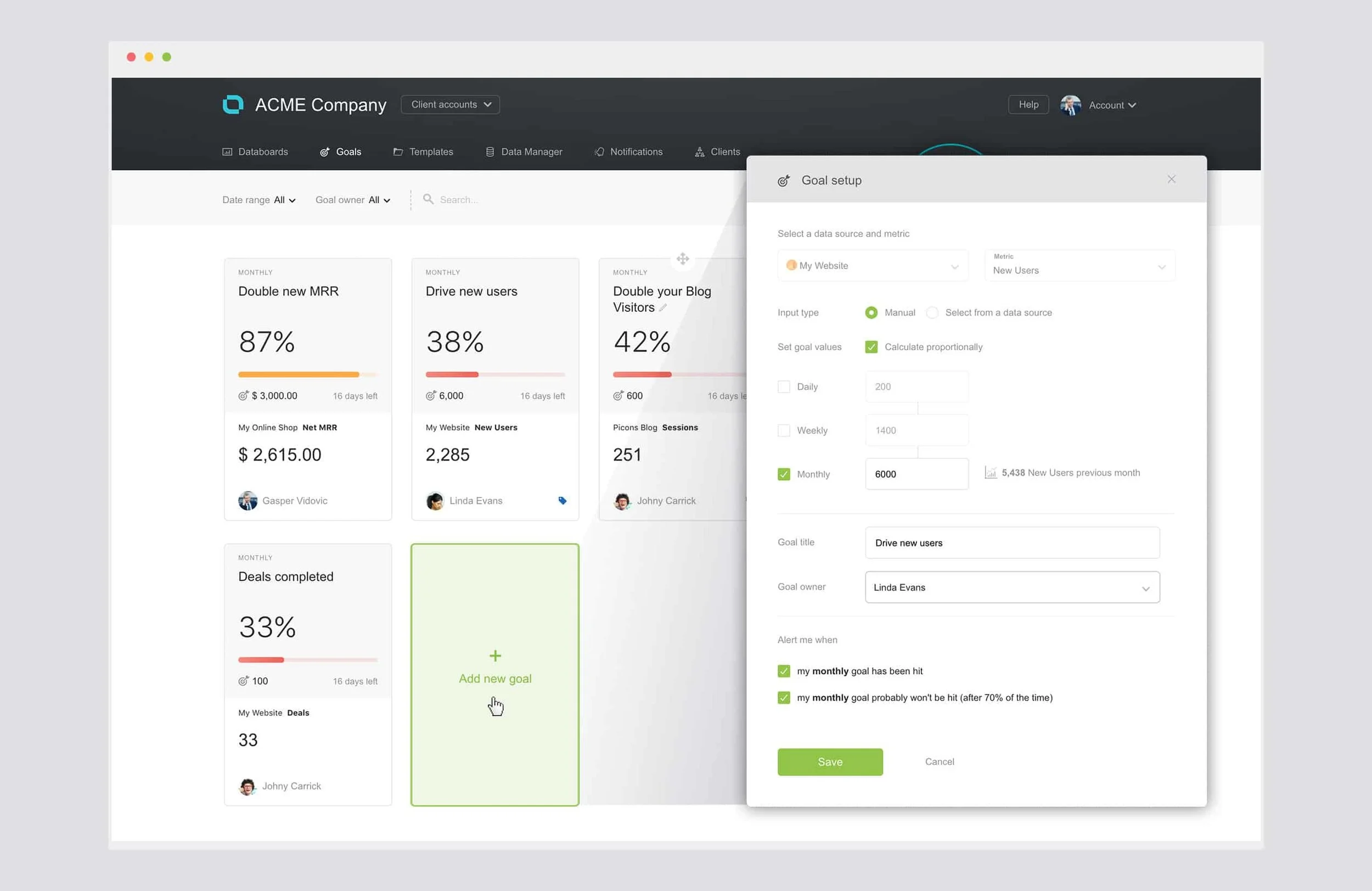

3. Goals and Scorecards

Goals are an advanced form of Alerts. Goals are present in a Performance BI tool.

Goals are set against any measure or metric required to reach an outcome in the business and are very useful in that they:

Provide feedback to individual user roles on what is essential to the business success at any given point in time.

Tells the team on which tasks they need to focus their effort on.

Provides them with the time span to gauge if they’ve reached the intended business goal before moving between tasks.

Managers or analysts can set goals for team members, without the need to communicate it in lengthy emails or meetings.

Scoreboards is another feature of a Performance BI tool. It allows you to select specific metrics that you deem important to you, from different sources, and have it delivered to you through push notifications and emails.

Scoreboards are a great way to get an overall health check at any point in time without the need to visit the BI tool and comb through the individual dashboards. You can specify a time and a day in which you’ll receive recurring notifications with those specific metrics,

4. Snapshots

Snapshots take a screenshot of a specific dashboard and deliver it to a specific person or shared online. Snapshots are great for those on the move and require delivery of a dashboard automatically.

Snapshots are used to send formatted a performance report to a client, doing so removes the burden of giving them access to your company BI tool.

Any combination of the above tools can be found in a single BI tool. They are a huge time savour and increase productivity and usage of the BI tool in a company. The inclusion of those tools doesn’t affect the final pricing of the BI tool. However, they affect the usages of the BI tool in your company.

A BI tool provides all the tools necessary to help a business in data collection, sharing and reporting to ensure better decision making. Having said, most people would think a BI solution allows for business or data analytics. While related, BI and business analytics are not the same.

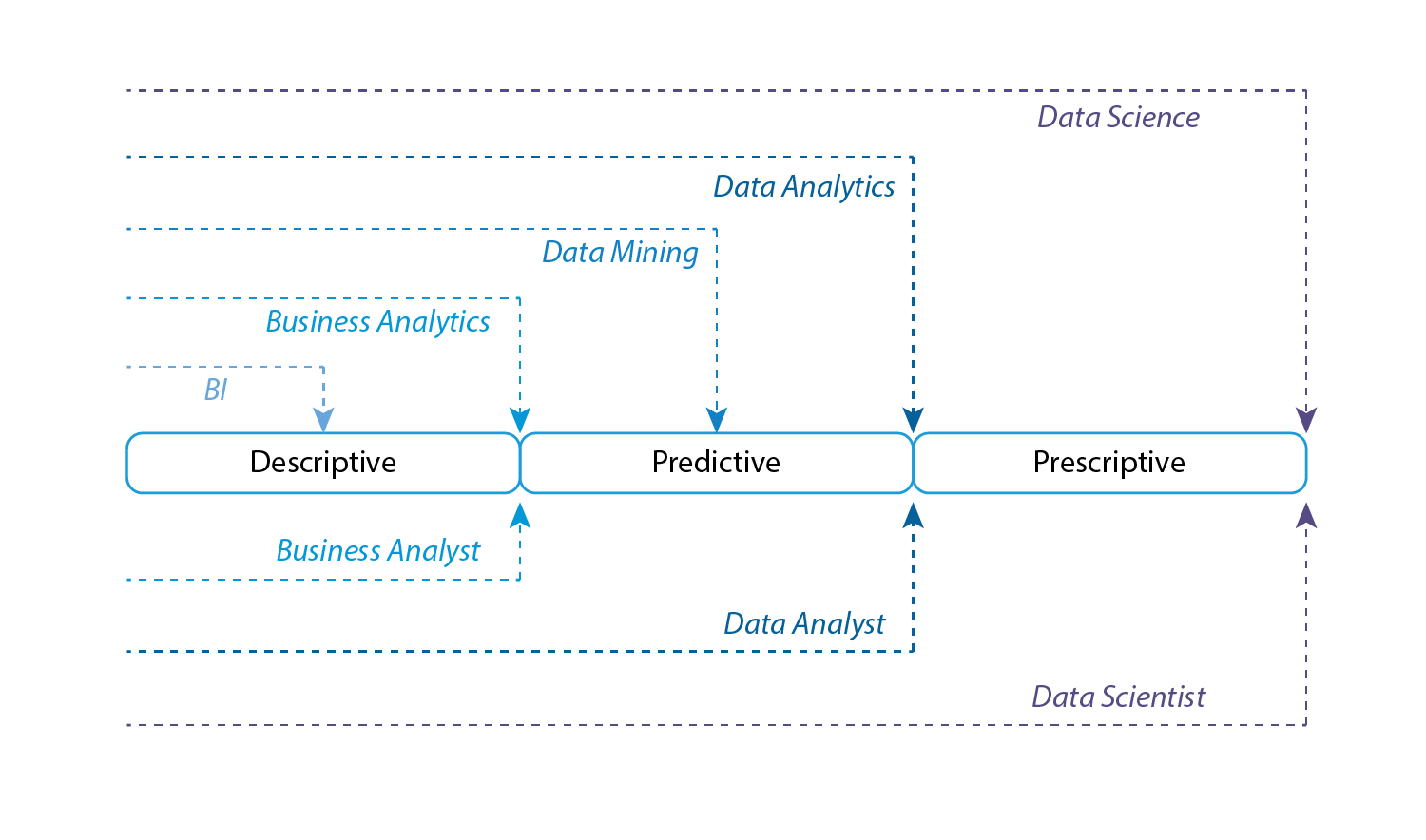

BI is the broadest category involving Data Analytics, Data Mining and Big Data. On its own BI refers to data-driven decision making with the help of aggregation, analysis and visualisation of data to strategise and manage business processes and policies. Traditionally, BI deals with analytics and reporting tools, which helps in determining trends using historical data.

Business Analytics, on the other hand, helps in determining future trends using data mining, predictive analytics, statistical analysis and others as well to drive innovation and success in business operations.

“Business Intelligence is required to operate the business while Business Analytics is required to transform the business.”

To understand the difference between BI and all disciplines of analytics we need to get familiar with the types of analytics we can perform on data. In the business question a BI tool can answer we explained that a BI tool provides answers to How, Why and What. we'll categorise these questions at a high level into three distinct types.

No one type of analytics is better than another, and in fact, they co-exist with and complement each other. For a business to have a holistic view of the market and how a company competes efficiently within that market requires a robust analytic environment

Those types are:

Descriptive Analytics, which use data aggregation and data mining to provide insight into the past and answer: “What has happened?”

Predictive Analytics, which use statistical models and forecasts techniques to understand the future and answer: “What could happen?”

Prescriptive Analytics, which use optimisation and simulation algorithms to advice on possible outcomes and answer: “What should we do?”

Business Intelligence is strictly descriptive, even more so, BI doesn’t cover all aspects of descriptive analytics. Because descriptive analytics is further broken down by the type of decisions required, those decisions are Exploratory, Descriptive and Causal.

Exploratory

Exploratory analytics is the first level of analytics done and usually done first. It gives you a broad understanding of what the underlying problem could be. The data collected for exploratory analysis can be from submissions forms, internet traffic, social comments, focus groups, internet communities.

Descriptive

Descriptive analytics tries to determine the frequency with which something occurs or the covariance between two variables. That data used for descriptive analytics are either passive (e.g. POS, social media, web data, audience engagement) or active (e.g. Survey’s, comments, reviews).

Causal

Casual analytics tries to find a causal link between two variables, cause-and-effect relationships. Casual analytics is not designed to come up with final answers or decisions.

One thing to add here, causal analytics is not about finding a correlation, as both are different.

Causation is one variable producing an effect on the other.

Correlation is the relationship between two variables.

A famous example of the difference is the Storks theory. There was a belief that storks caused fertility when scientists correlated the number of babies being born in houses with storks sitting on chimneys. The correlation was staggering. Conclusion: storks deliver babies!

A more reasonable way to explain that correlation is that people who care about their babies heat their home by putting an extra log in their fireplace. Storks like to build their nest on top chimneys because of the warmth it gives. So the presence of storks is caused by the heat, which is caused by families heating their houses for new babies in the winter.

Before we show what BI covers from the above analytics types, we’ll need to explore the other disciplines of analytic fields:

Business Intelligence (BI) is a comprehensive term encompassing data analytics and other reporting tools that help in decision making using historical data. BI vendors are developing cutting-edge technology tools and technologies to reduce complexities associated with BI and empower the business user.

Business analytics provides analysis as it relates to the overall function and day-to-day operations of a business. It’s process-oriented which involves analysing data and assessing requirements from a business perspective related to an organisation’s overall system.

The output of the business analytics task is an action plan that is under stable by company stakeholders.

Data mining is a systematic and sequential process of identifying and discovering hidden patterns and information in a large dataset, and It is also known as Knowledge Discovery in Databases, through the use of mathematical and computational algorithms, data mining helps to generate new information and unlock the various insights.

The output of the data mining task is a data pattern.

Data analytics is a superset of Data Mining that involves extracting, cleaning, transforming, modelling and visualisation of data with an intention to uncover meaningful and useful information that can help in deriving conclusion and take decisions. Data analytics is about exploring the facts from the data that results in having a specific answer to a specific question, i.e. there is a test hypothesis framework for data analytics.

A business needs various tools to the right data analytics and requires the knowledge of programming languages like Python or R to perform robust data analytics.

The output of data analysis is a verified hypothesis or insight on the data.

Data Science is one of the new fields combining big data, unstructured data and a combination of advanced mathematics and statistics. It is a new field that has emerged within the field of Data Management provides an understanding of the correlation between structured and unstructured data. Nowadays, data scientists are in high demand as they can transform unstructured data into actionable insights, helpful for businesses.

The type of roles that perform the different types of analytics is Business Analysts, Data Analysts or a Data Scientists. The graph below explains what type of analytics by the disciplines and the roles of analysts.

The most common field used alongside a BI tool is Data Analytics, and in most recent BI technologies both go hand in hand.

The significant difference between BI and Data Analytics is that Data Analytics has predictive capabilities whereas BI helps in informed decision-making that is primarily descriptive.

Data analytics, like vitamins, is good for a business, and if done correctly has the potential to increase traction and revenue drastically. Like vitamin though, it’s not a pill that you can pop in your mouth and your done, data analytics require continuous refinement.

BI and Analytics vendors are noticing the shift driven by big data and the need for businesses to combine historical with predictive and machine learning tools. Role of analytics is critical in extracting the relevant information and deriving actionable insight. Analytics is becoming a significant factor in decision making at any insights-driven business. Simple and easy to retrieve reports is a critical functionality required in data analytic tools. The current challenges faced by organisations is integrating the BI systems with the existing system and generate reports that offer actionable insights.

As such a BI tool that allows for smooth and seamless integration between the BI tool and any Data Analytics tool has a significant influence in the BI tool market position and pricing.

In July 2017, Gartner published its annual “hype cycle” graph that describes the status and maturity of all emerging technologies ranging from brain chips to self-driving cars. Notably, the report introduced a new concept called “Augmented Analytics”, which they claimed to be the “future of data analytics”, or simply put "Analytics for Humans"

In the report, Gartner describes Augmented Analytics as:

“an approach that automates insights using machine learning and natural-language generation, marks the next wave of disruption in the data and analytics market.”

To explain what Augmented Reality is, we'll need to understand the problem Augmented Reality is trying to solve.

In the Analytics section, we've explored the different types of analytics done on data, and what types of analytics BI solves, which covers most of the Descriptive Analytics scope. As such, makes BI suitable for Reporting and displaying data. You'll be surprised to learn that Analytics is not usually a function of a BI tool, Analytics has always been a separate process and requires particular languages and applications (e.g. SPSS, R or Python). However, in recent years there is with the explosion of data and an equal demand from companies to manage, explore and analyse data in a holistic view, BI vendors started introducing "some" simple analytics features in their BI tools. Examples of analytics features that layers on top of BI reports are:

Trends

Average lines

Median lines

Median with quartiles

Distribution bands

These analytic models are done for you and require minimal input from the user beyond drag and drop on the BI report, which makes it accessible for nontechnical users to get decent statics from the data in no time.

Bear in mind, that not all BI tools offer these analytics features. Correct analytics process is a different process from BI, so it's not easy for BI vendors to include a full-fledged Analytics process inside the BI application. BI and Analytics use different languages and different way of accessing and processing data (as we'll explore further in this guide). Also, in software development, it takes months of development and testing to release a couple of feature to an application, analytics is not a feature, it's a complete application on its own. So, to expect an established BI tool to become a 2-for-1 app is not practical. It's much easier for a new BI tool to be a 2-for-1 solution because the code of the app is young, and the vendors would have architected the application to accommodate both disciplines from the beginning.

However, analytics is much more than just those simple analytics. Today we require data mining, predictive modelling, machine learning, and AI. Those disciplines follow a complex process of data processing. They also require domain experience, knowledge of statistics and a degree in mathematics.

As an example, let's look at the steps required to perform a simple regression analysis between two data variable, we'll demonstrate the steps used for a Linear Regression. In statistical modelling, regression analysis is a set of statistical processes for estimating the relationships among variables.

Select the sample size. Are you running tests on 10,000 records or 10,000,000 records? Does this sample size represent all of your data variables?

Clean, label and format the data, this probably take most of the analysts times.

Select the set of variables you want to test for a relationship (i.e. the dependent variable and independent variables). For example, if you want to increase the close deals rate, then the close rate would be the primary variable. The rest of the variable are chosen either automatically or manually.

Automatic means the Analytics app goes through every column in every data source and run a regression analysis against account close rate.

Manual means the analyst needs to choose the variables to test, were the right variables picked? (this is where domain expertise comes into play, the better the analyst knows about your business domain the more helpful the analysis is)

Create new variables if the current variables are not enough.

Check the distributions of the variables you intend to use, as well as bivariate relationships among all variables that might go into the model. If the distribution is not satisfactory, go back from the beginning, rinse, repeat.

Run the variable through regression models, you'll need to find the model that satisfies the research your conducting. There are several models to test with:

Linear Regression

Logistic Regression

Polynomial Regression

Stepwise Regression

Ridge Regression

Lasso Regression

ElasticNet Regression

Refine predictors and check model fit. If you are doing a genuinely exploratory analysis, or if the point of the model is a real prediction, you can use some stepwise approach to determine the best predictors. If the analysis is to test hypotheses or answer theoretical research questions, this part will be more about refinement.

Test assumptions, if the right family of models were investigated, variables thoroughly investigated, and the right model was specified, then this step will be about confirming, checking, and refining.

Interpret the final results, communicate those insights with the organisation and convert them into action plans

The above steps goes for every predictive analysis. AI and Machine learning are even more complicated. So as you can see, this type of analysis is best suited to be done by data scientists or data analysts, both of which are scarce and expensive to hire, making it extremely cost-prohibitive for smaller businesses to leverage analytics.

Even with the right person, it takes some time between the business request is made and getting the results and action plans. Data analysts or scientists spend over 80% of their time doing data preparations tasks, from labelling to cleaning the original data sources, as well as doing regression analysis, which is a waste of the analysts time and the investment of the business.(read more here). Therefore, a typical data scientist only analyses a small portion (in some instances an average of 10%) of your data that they think has the most potential of bringing you great insights. Meaning you may miss out on valuable insights in the remaining 90%, Insights that may be mission-critical for your business.

For these reasons, almost all SMBs are still in the early stages of analytics adoption, despite a strong desire to leverage their data.

Those challenges faced by businesses are not going away anytime soon. There won't be a sudden spike of data scientists to solve the talent shortage anytime soon. Neither can you expect that data analysis becomes easier to do for non-technical business people?

That’s where augmented analytics comes in. What augmented analytics does is to relieve a company’s dependence on data scientists by automating insight generation in a company through the use of advanced machine learning and artificial intelligence algorithms.

“The promise is an augmented analytics engine can automatically go through a company’s data, clean it, analyse it, and convert these insights into action steps for the executives or marketers with little to no supervision from a technical person. Augmented analytics, therefore, can make analytics accessible to all SMB owners.”

Augmented Analytics is at its infamy, still an emerging technology. As such, each BI tool having their interpretation and implementation of this technology. Software development and architecture is a lot like building a house. If the house plans are still in the blueprint stage or the foundation's stage, it's easier to make any changes to the house. However, a finished house which requires expansion of the toilet floor space, it's a simple job, as long as foundations stay in place, and the basin stays in place. Ohh you want to move the basin, God helps you.

As such, established BI tools attempts of Augmented reality are nothing short of a patch job, which is done mostly by allowing Analytics tools access to the BI data sources and having the analsys displayed in dashboard alongside other BI reports. In our house analogy, this is a lot like building a garden shed. Other emerging tools are taking a better-integrated approach, Microsoft Power BI integrates Augmented Analytics in two way:

Finding quick insights about your data set, this is nothing more than running correlation analysis on all of your data, most of the results are mediocre (e.g. there is a strong correlation between the day Tuesday and the close rate for deals, so clap your hand twice, stomp your foot and send the proposal on Tuesday)

Finding the drivers for a given result in a report, which opens a report that shows all the drivers for that particular result and segmented into several categories. Why functions are more useful than quick insights as it relates directly to the data.

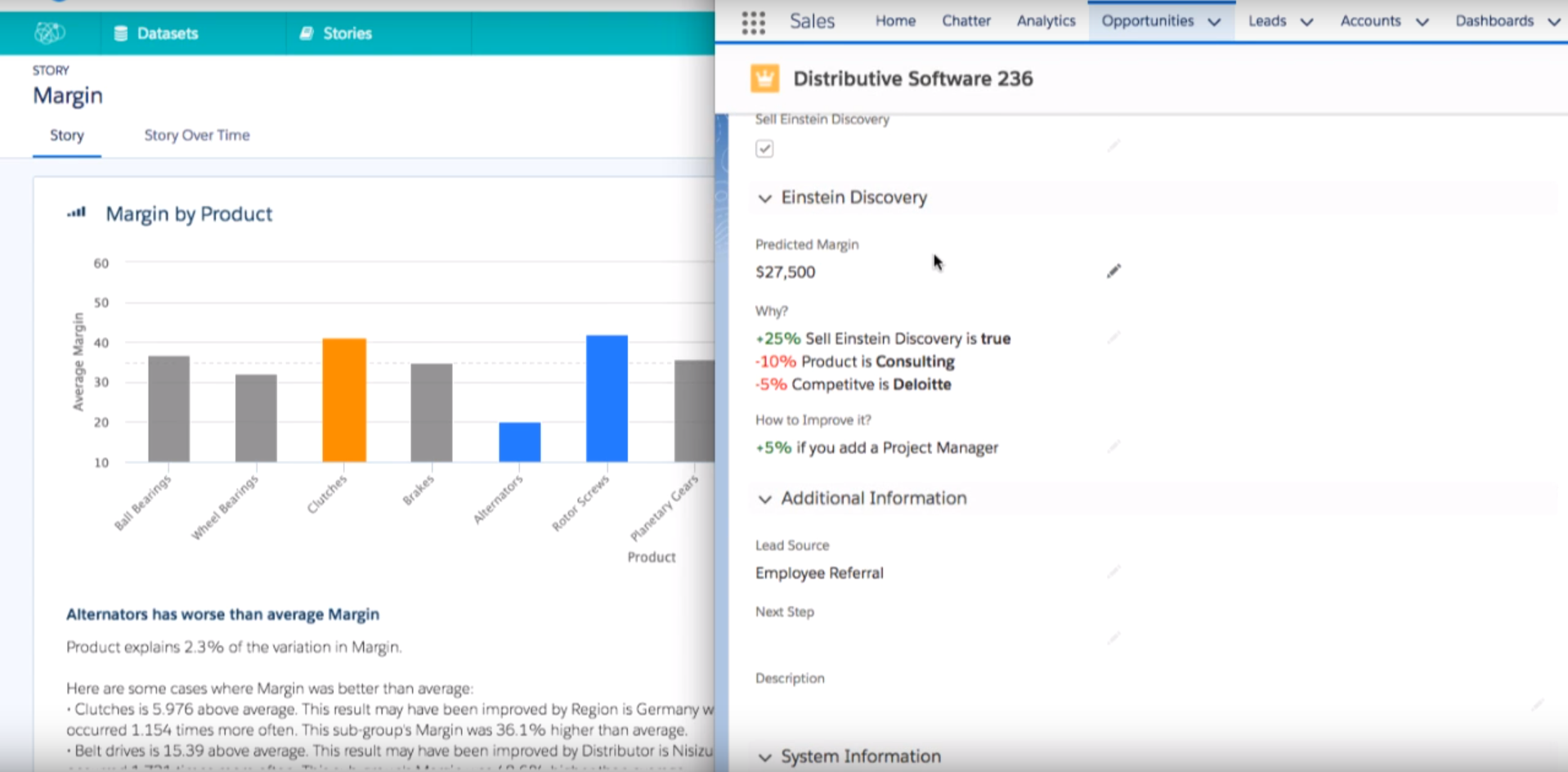

The best implementation we've seen to date for Augmented Analytics is courtesy of Salesforce Einstein Discovery (Salesforce Einstein solution is discussed in greater depth later in this guide)

Salesforce Einstein Discovery is part of Salesforce Analytics solution, and it’s tightly integrated with Salesforce CRM. Which means you’ll need to use Salesforce CRM for Discovery to work.

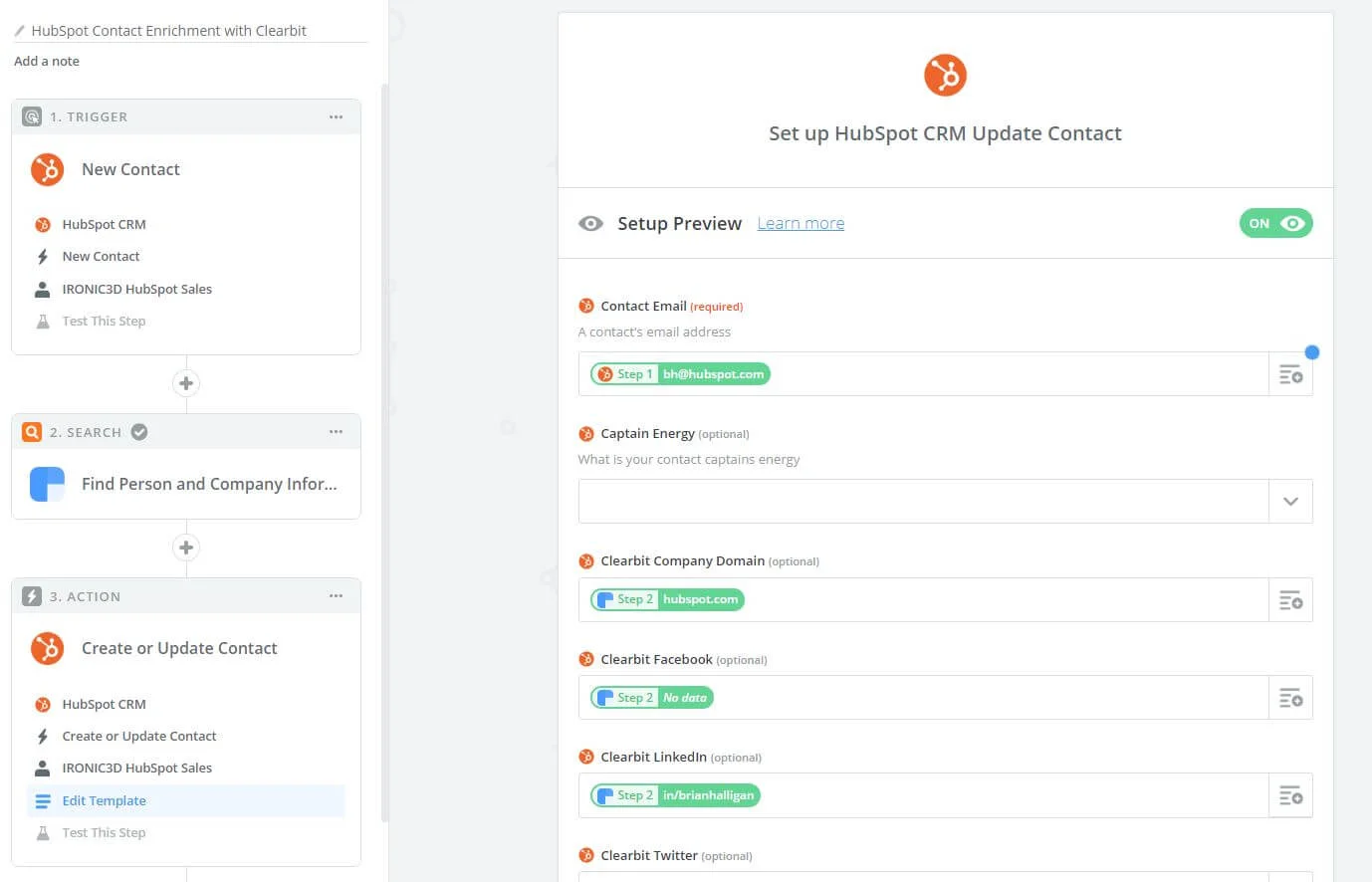

If we were to tackle the same steps required to increase the close rate example, these are the steps required inside of Salesforce Einstein Discovery.

Select the Close Rate as the metric you want to increase.

Pick the data columns for discovery to analyse (Discovery have a powerful Natural Language Processing (NLP) engine so that it can analyse written notes as well)

Discovery takes 75% of this data and runs regression analysis on them

Discovery uses the remaining 25% to test the predictive models against the 75% data

Start the analysis

Resulting data graphs along with actions plans are given to the user.

This processes usually takes anywhere from 5-10 minutes.

Up to this point, Discovery presents the results like any other BI and Analytics integration (albeit with more intelligence and with an action plan), providing analysis on a separate report. Discovery takes a fraction of the time than other solution, and yes the analysis is performed by any users, but what makes Discovery stand out from other implementation is what happens after that last step, which puts insight into context.

Besides the stand-alone report, all the action plans to increase the close deal rate sits in the sales rep deals page. Discovery embeds itself in the side and provides the sales rep with a probability of closing this deal and what are the recommended steps to increase that probability to win the account (taking into account the sales rep data, customer data, similar industry trends…etc). Each time the sales rep executes on the recommended list the probability increases.

You can combine multiple insights into the same report. There isn't any limitation to the number of insights (to increase or decrease a metric) you want to create, so, for instance, you might want to get insight on how to close a deal faster as well. Same steps as above, and now the sales rep have two insights that help in winning the deal and closing it faster.

A nontechnical person performs this analysis in the manner of minutes. Not only does Discovery provide unparalleled ease of access and use to predictive modelling, but it also cuts down costs significantly to the business as the need for an in-house analyst are not required.

In this chapter, we’ll take a look at some real-world examples of BI implementation in small and medium businesses. We’ll bring examples from two business. A small company in the health and fitness space, the challenges they faced operating in the digital space, and how BI helped them. The other example is from a more established business, how BI was implemented to solve a business process to allow for growth without the need to hire extra team members.

The examples provide a complete background story along with the business challenges, the logic of the solution and the outcome. Providing this level of information helps you in understanding the context on why and how the solution helped the business.

- Ch3.1 Performance Marketing

- Ch3.2 Process Optimisations

Background:

This first use case explores a health and fitness company selling on-premise accredited courses and online training models. The company have been operating for 9 years in Sydney. The core business team is small, and most have been with the business from the start, the company had various contractors throughout the year as well. In the beginning, most of the marketing and sales were through events, council promotions, exhibitions and health and fitness publication. The company have transitioned to digital marketing almost entirely 4 years back.

The business revenue was a little shy of $400,000 in the last financial year. The Marketing cost for the same year was $90,000, while the remaining revenue spent on fixed costs and wages, with no room for profit.

Challenges:

The company was facing several challenges that kept on growing year after year, mostly because of the shift in the digital landscape and the team couldn’t cope with the massive changes. The main challenges were the following:

Burdon of technology. The company have been picking up tools to operate the business as needed. Those tools were spiralling out of control, which meant if a team member or a contractor introduced a tool, no other member of the team could pick up the tasks if that person were not present. It also meant most tools needed an outsource contractors to maintain and updates.

Tools fragmentation. With as many used tools, fragmentation is always present. Records stored on multiple spreadsheets and system (locally, DropBox or Google Drive). Multiple payment gateways, multiple booking systems, various marketing and sales tools or sheets. There was little or no integration between any of the tools. This type of fragmentation is of a massive cost to any business.

Client support and servicing. Fragmentation first casualty is usually client support. It takes longer to edit or find records, simple tasks that require minutes ends up taking an hour or more. Data duplication is error-prone and usually not updated (customer activities need to be collected by hand from one tool to another). The result of all of it is an overwhelmed team member that keeps on dropping the ball with their customers.

Visibility. The business had no visibility on how the business was operating beyond the financial spreadsheets from the bank feeds and accounting solution. There is nothing to measure, or improved upon because of the lack of data. Even if all the tools they’ve been using did provide them with a wealth of data, they didn’t know how to use them. For instance, they couldn’t tell a customer who purchased a course was a result of a marketing campaign they performed or the originating channel.

Growth. The company didn't have any growth. Their year on year revenue was shrinking by 30% on average, year over year.

Solution:

Business Intelligence was pitched as part of a five months Digital Transformation project with an investment of $50,000. The project addressed three main areas for the business:

Systemisation:

Consolidating all the business tools into one core business tool (CRM implementation that included sales records, customer records, custom objects for events and training, booking, online payment gateway, campaigns and automated marketing).

Guided systemised business processes, which allowed any team member to pick a task with clear outcomes and follow up tasks.

Unifying the company websites, migrating to a modular growth-based design that can be updated and built by the existing team members.

Automation:

Integrating all cloud-based tools which feed information to a central CRM system. Automation relieves human data migration between application, usually done by a human and results in data entry errors.

Automating client inquiries, follow up emails, collection email, customer onboarding emails.

Reports and records automation for events and courses.

Analytics:

Setting baseline measure for all the business activities and KPIs. If you cannot measure it, then you cannot improve it.

Providing a complete tracking workflow for customer journeys (Marketing and Sales).

Business Intelligence to provide the business with a complete insight on the business operation and future decision making.

BI is an integral part of growth and the success of the project. As such, we’ve asked the marketing, sales and delivery team member to take a behavioural profiling audit.

Most larger organisation are familiar with behavioural profiling practices and actively exercise them for recruits, for smaller businesses though, this might seem like an odd step to make. The truth is, there is an application for every problem, however, if no one in the business is going to use this application or solution, then nothing changes for the company’s bottom line. Tools don’t operate on their own. They need a user to operate and make the most out of them. When a medium or a large company introduces change, there is little or no resistant amongst the employees, because the business at that point have processes already in place, and every team member knows their tasks and how to perform them with ease. If there is a resistance to change it usually sits with upper management and senior executives.

Smaller companies and start-ups are different in that the team members are usually multi-disciplinary, they perform more than one task (one person does marketing: blogging, copywriting, EDMs, paid marketing, social marketing), and they are closer to the business founders. In a nutshell, they operate as a family. If there is a resistant to change, then the effect on the business is enormous.

Behavioural profiling gives us three critical insights in implementing a solution:

How the team operates

Who’s going to resist changes

How to introduce the changes

Analytics and BI is a left-brain process, it’s logical, analytical and objective. Right-brain, on the other hand, is emotional, imaginative and subjective. All of the team members were an S and an I, which meant they are kinesthetic, emotional, creative, visual learners, they seek togetherness, community and most importantly they don’t want to be bound by numbers to operate. BI is the complete opposite of all of those things. Our early tests proved a high resistance to change and in some cases refusal of the solution altogether.

Outcome:

A three months tracking and analytics audit proved that all sales were not directly generated by marketing. Paid advertising (40% of the marketing budget) did not generate any customers. EDM (60% of the marketing) had little influence on the buying decision. 90% of customers were new leads generated from organic search results. All paid media advertising was suspended and directed to nurture organic leads and website visitors, based on visitor data.

BI gave management real-time insight on how the business operates and the decision needed. By breaking down the results into their drivers, they were able to communicate the required goal and benchmarks to the team. The directors of the business were able to keep track of goal completions. The team still doesn’t access the BI solution but are happy to receive simple guided goals and measure for each of their tasks.

The business reached their previous year revenue in the first three months of the project. The remaining two months of the project the company went over by 60%.

Background:

The second use case explores a Media Agency that manages performance campaign for brands. The agency is a start-up with a $13,000,000 yearly revenue. Their growth was 20% year on year. However, as many start-ups with a growth phase there was no profit. The agency had offices in several cities and a relatively average team of 40, which included sales, production, IT, media buying, business analysts, finance and management.

The agency wanted a BI solution that would help in process optimisations to reduce operational costs, generating new products and increasing profit margins.

Challenges:

One of the main challenges of the business was on the reporting side of things. The business wanted a streamlined version to prepare reports for their clients. Their early attempts included building a bespoke booking system which wasn’t successful in fixing the problem.

Sales would acquire an account to run a digital paid campaigns for a brand. The campaign then gets passed to the media buying team that have three main areas of responsibilities:

Setting up the campaign on all partner channels and traffic the campaign line items.

Monitor and optimise the campaign performance based on the campaign metrics.

Report on the campaign performance to the sales team, the client and the finance team.

The media buying team consisted of 7 team member, and the agency was actively recruiting more positions for this role. On average the agency was trafficking 150 campaigns per month. An example of a campaign is Coca-Cola Summer Fun. A campaign has 7-8 targeting criteria on average. An example of targeting criteria is male between the ages of 18-24 living in metropolitan Sydney. Each targeting criteria is made up of an average of 6 different creatives. An example of creatives is Video, Interstitial, banner or an mrec. So a media buying team member needs to set up 48 creatives on average for each campaign, monitor and optimise each one daily. More so, a single campaign runs across 3 different channels on average. Meaning a single setup is 144 creatives. The agency had 44 different channels.

When it comes to reporting, a media buying team member needs to visit every channel, download individual reports for each creative, target and campaign. Combine them in a spreadsheet. Format and clean. Then send to the client, salesperson or finance team. The media buying team spent 80%-90% of their time on reporting. With little to no room for optimising. Also, requests made by the sales team to check campaign performance were not met on time or completely ignored because of the workload. Also, the biggest department that was suffering (besides the media buying team who usually worked 12 hours per day) because of this manual process where the finance team. The agency sends their end of month invoices at the beginning of every month. To generate those invoices five things needs to happen:

Collect all trafficked creatives and lines items from all channels for a campaign in this month. Since the finance team doesn’t know anything about advertising and media channels, the media buying team would need to generate those final reports, which is always late.

Name each campaign and targets with the insertion order name and campaign prefixes.

Check each delivered target metrics against the insertion order. Since insertion orders cover the whole span of the campaign and not a single month, the finance team needs to split the period manually and determine the required delivery for each month, compare it to the delivered for that month, check older invoices and the remaining before generating each line,

Compile a report and send to each sales person to double their the delivery and IO numbers.

Generate an invoice for each campaign which breaks the targets as items in the invoice.

The processes to generate an end of month billing for a given month was a 2 months process.

Solution:

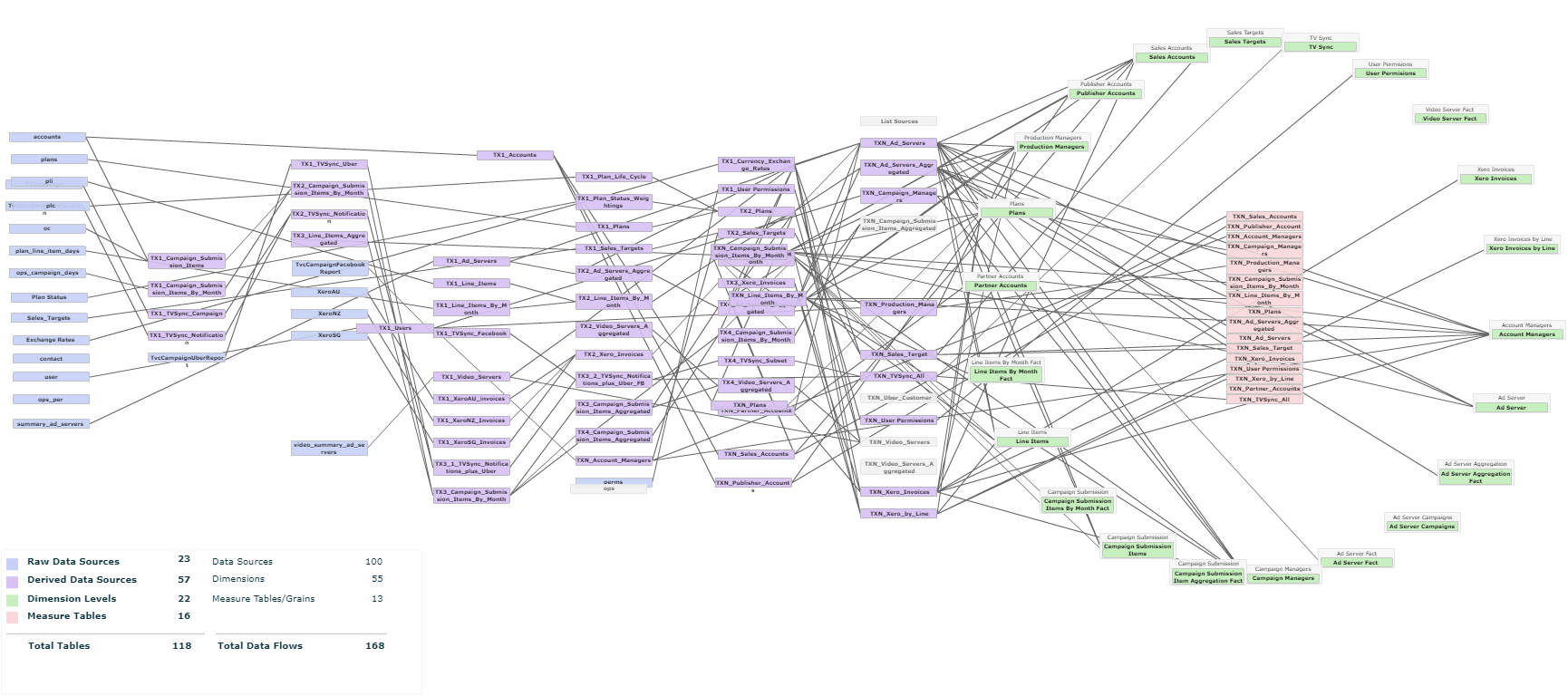

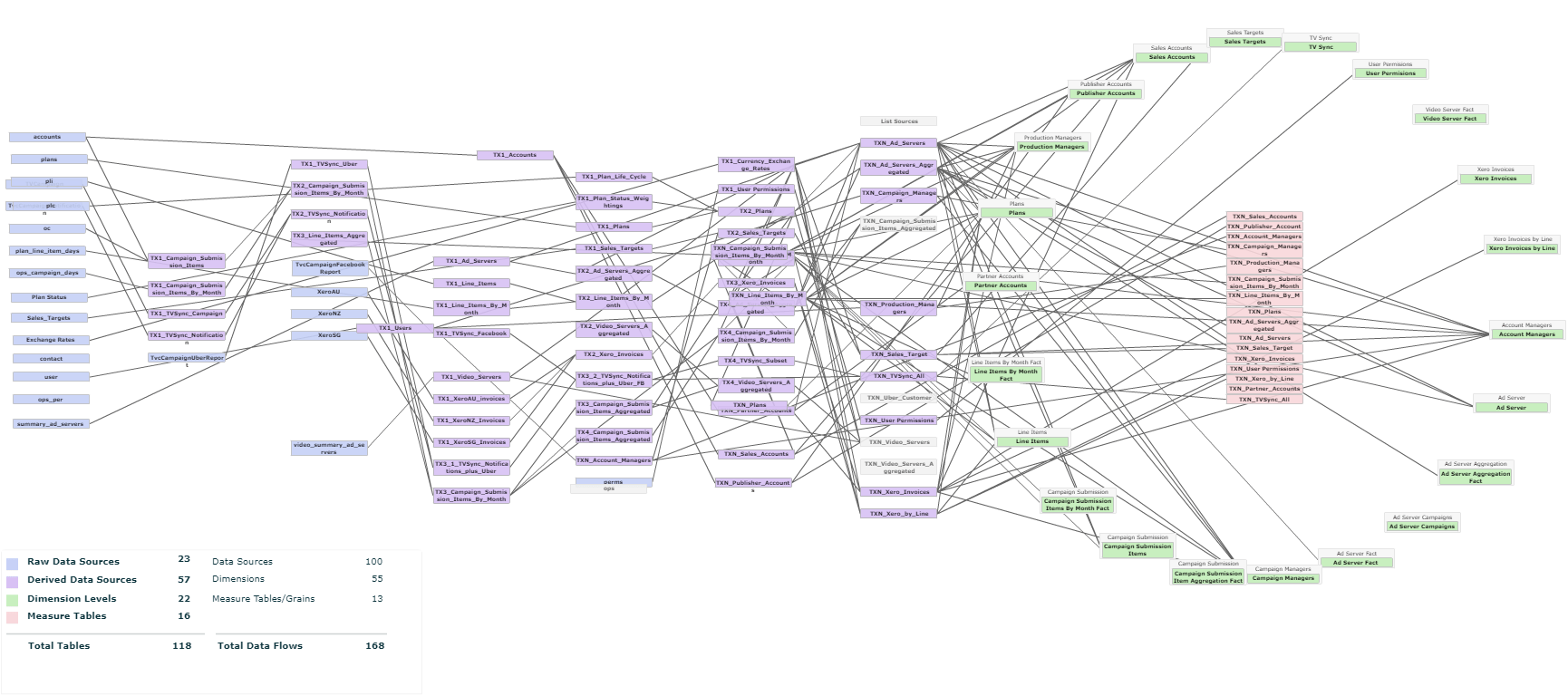

BI is a perfect solution for such processes. Collecting internal operating data and external operating data and report on them is at the heart of what a BI tool offers. The BI solution took 8 months to implement, which involved massive back-end development and implementation for all the different data sources, as well as the modelling of the data and reports generations.

The resulting BI was collecting data from the business CRM, booking system, 44 advertising channels, and accounting software. Drillthrough dashboards created for every department and even individual roles in the business. Data was refreshed daily and reported on a daily basis.

Outcome:

Reporting is done automatically and freed up 80% of the media buying team. In fact reporting happens by one of the following ways:

A client can subscribe to a specific report that is delivered to them daily, weekly or monthly. Formatted and cleaned.

A media buying team can subscribe a client to a specific report.

Creation of scheduled reports involves a few steps. Delivered to a client destination or a BI tool.

Media buying team can generate a new report to match client needs with a few simple steps.

Sales have access to all campaign reports and can pull all relevant data with a few clicks.

Finance team have access to accurate, segmented, calculated, formatted, checked and paced reports at any given time.

Today, the end of month billing processes takes one day, as opposed to the 2 months old process.

Ch4. BI Components

The Back-End OF a BI Solution

There is a great deal of effort that goes in implementing a BI solution for any business. Most of that effort doesn't sit with the BI tool itself. Instead, it relates to the back-end requirements for the implementation. This effort is in contrast to what BI tools publicise of a plug and play solution. In a perfect world, a plug-and-play solution is possible, for the real world though, BI implementation goes through several back-end stages before generating any reports or insights. Depending on the tools used in the business and the insight requirements, these back-end stages can take anywhere from a few weeks to 6 months or more. Aside from the additional time, back-end stages have the potential to add tens of thousands of dollars to the implementation budget.

This chapter explores all the back-end implementation stages required for a successful and useful BI solution for a business.

- Ch4.1 Data Sources

- Ch4.2 ETL

- Ch4.4 Connectors

- Ch4.3 Data Warehouse

- Ch4.5 Data Preparation

Defining Data sources is a planning step which happens early in the implementation phase. This step focuses on identifying 2 areas:

Listing all data sources and defining hierarchies and relationships between them

Identifying how data exporting options from each data source

1. Listing and Identifying Keys

The first objective of this planning phase is to to make a list of all of the data sources you want to pull information from, group them between Internal and External data sources. Most often than not those data sources are discreet with no connection or relationship between them.

Relationships and references between data sources is an essential step of a successful BI implementation. Having arbitrary and discreet data sources without any relationship results in broken reports. The way to perform relationship between data is through identifying the Primary Keys and Foreign Keys in each of the data sources used in the BI tool.

The primary key consists of one or more columns whose data contained within are used to identify each row in the table uniquely. You can think of the primary key as an address. If the rows in a table were mailboxes, then the primary key would be the listing of street addresses. When a primary key is composed of multiple columns, the data from each column is used to determine whether a row is unique.

A foreign key is a set of one or more columns in a table that refers to the primary key in another table. There aren’t any unique code, configurations, or table definitions you need to place to officially “designate” a foreign key.

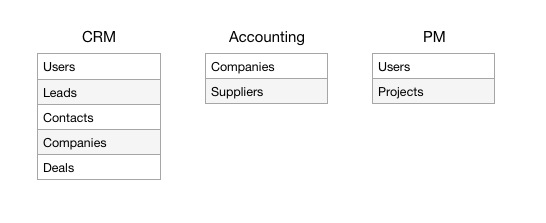

As an example, assume you have the following sources of data in your business:

CRM system that manages all the business and sales operations. You have company users, contacts, organisations, and opportunities.

An accounting software which includes records for companies and suppliers with all transactional data.

A project management software with company users and projects

If one of the questions a BI tool should provide an answer to is: How much was invoiced to contact X in the last 6 months?

The first issue we have is that the accounting system doesn’t include any contact information, it only stores company details. On the other hand, the CRM have both contacts and companies. So how can we relate contacts to invoices?

To do so, we would need to identify companies in both applications and match them together through a unique identifier, in this case email (a company email) is the identifier.

Using a company name as an identifier is a bad idea since text can be different between systems and it’s not reliable.

Internally the CRM system would have created a relationship between the contact and a company by pointing a company to the unique ID of each contact in the system. In this case, ID is a primary key, and the companies record includes a reference to all those IDs through the hidden contact_id. This relationship is already in place and is provided to you by the CRM, so when you export the data from the CRM for the contacts and companies, those fields are present in each table.

For the accounting system though there isn’t a direct integration between both platforms, meaning we’ll have to plan which fields are the primary or the foreign keys. The email field in the CRM system can be considered the primary key, since it’s unique for each company, while the email field in the accounting system is treated as the foreign key later in the modelling phase.

The actual process a BI processes this query might be something like:

Find the contact details by name and select the contact ID

Select the company by contact_id AND select the company email address

Select company details from accounting application by company email address

Select the invoice amount for the company filtered by the duration requested

Display result of contact with invoice amount.

This process highlights the first issue and the primary purpose of listing and identifying keys between the data sources, we need to make sure the fields we designate as keys need to match between systems. If the CRM company email field is info@acme.com while the accounting system has the email field set to finance@acme.com, then when trying to perform any matching in the BI tool won't return any result.

Another example would be to generate a tabular report that lists all projects currently active, alongside their respective companies. Looking back at our diagram we’ll notice that the Project Management application doesn’t include any information about a company, the name of the company might be referenced in the project name somehow, but that’s not enough to make sure that a relationship can be made based on the name alone

As explained previously, text strings are not suitable for identifications, that’s because the text is user-generated. User-generated fields exhibit many issues, from typo errors to naming mismatches. The addition of a single space in the name might break the matching between names, lowercase and uppercase letters break the match. This type of mismatch often occurs between applications, especially in the absence of a strict naming convention policy for field naming.

In the case of a mismatch in our example, several solutions can be implemented to solve this issue.

One solution would be to create programming scripts that perform string manipulation on text strings upon exporting, however, this is time-consuming to implement in most cases.

A better option is to create custom fields in the PM application that allows for the manual input of foreign keys in the application (e.g. adding a company name field and a company email field in the project information records). This approach is the most common, the implications though is a fair amount of labour work to perform to update all previous projects manually.

Another solution would be in automating the creation of a project from the CRM application into the PM application through the use of API scripts or API helpers. This approach ensures that data from the CRM is used to populate the fields in the PM application without the human intervention (which is prone to errors). How easy is it to implement this solution depends on the availability of API integration options between the data sources currently used as well as the technical resources available in the company.

In all cases, having a strict naming convention policy in a company for field names would make this process much more manageable in the long run.

2. Data Exporting

The second objective of this phase is to understand how each data source exports the data. Here are the main questions we’re trying to answer:

Can we extract the data through a spreadsheet?

Is there an API to extract the data from each data source?

Which applications provide API connectivity and which applications provide a spreadsheet export?

How often do we need to extract data?

Is the extraction done manually or automatically?

Can we schedule exports?

How frequent?

What tables can each application export?

Having a clear answer to all of the above questions in advance indicates how involved will the next step of the implementation be, and how much-required resources (internal or external) needed to export the data, which in turn impacts the BI solution implementation cost.

The data sources planning phase is generally overlooked by some companies, as most BI implementation begins by picking a BI tool first and figure out the rest of requirements from there. This approach is costly and not recommended as it inflates implementation and running costs considerably. Planning and identifying issues early in the processes ensure a smooth implementation and tighter control of the budget and time to implement.

For some companies, the planning phase helps in identifying significant shortcomings in their current applications currently used. It is not uncommon for a company to decide on switching the core business applications at this stage in favour of better integration with the BI solution. This step might even prove to be the most cost-effective than trying to solve the shortcomings by going through a development project. In either case, it's better to map all of the issues beforehand to mitigate budget and time inflation risk.

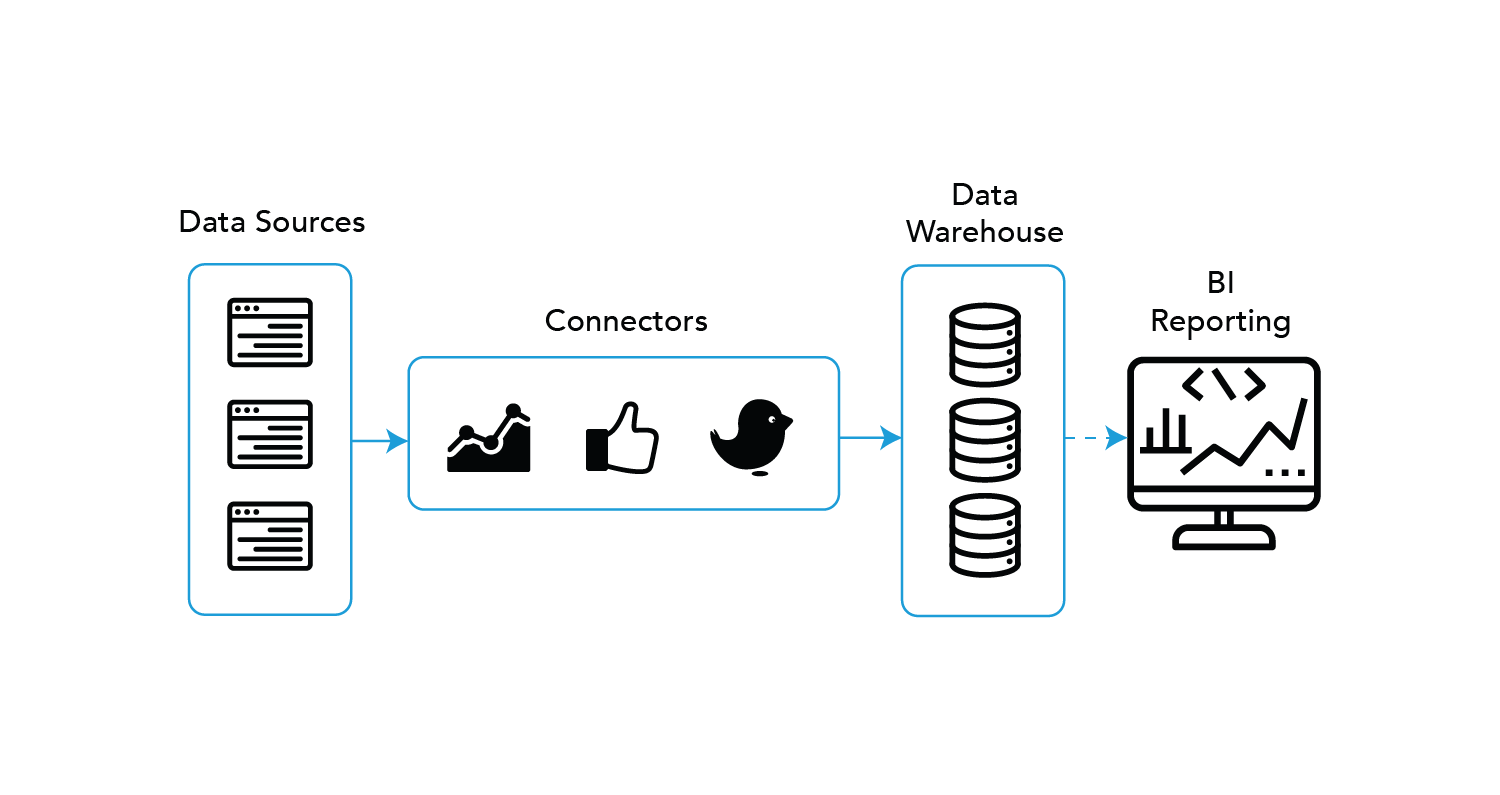

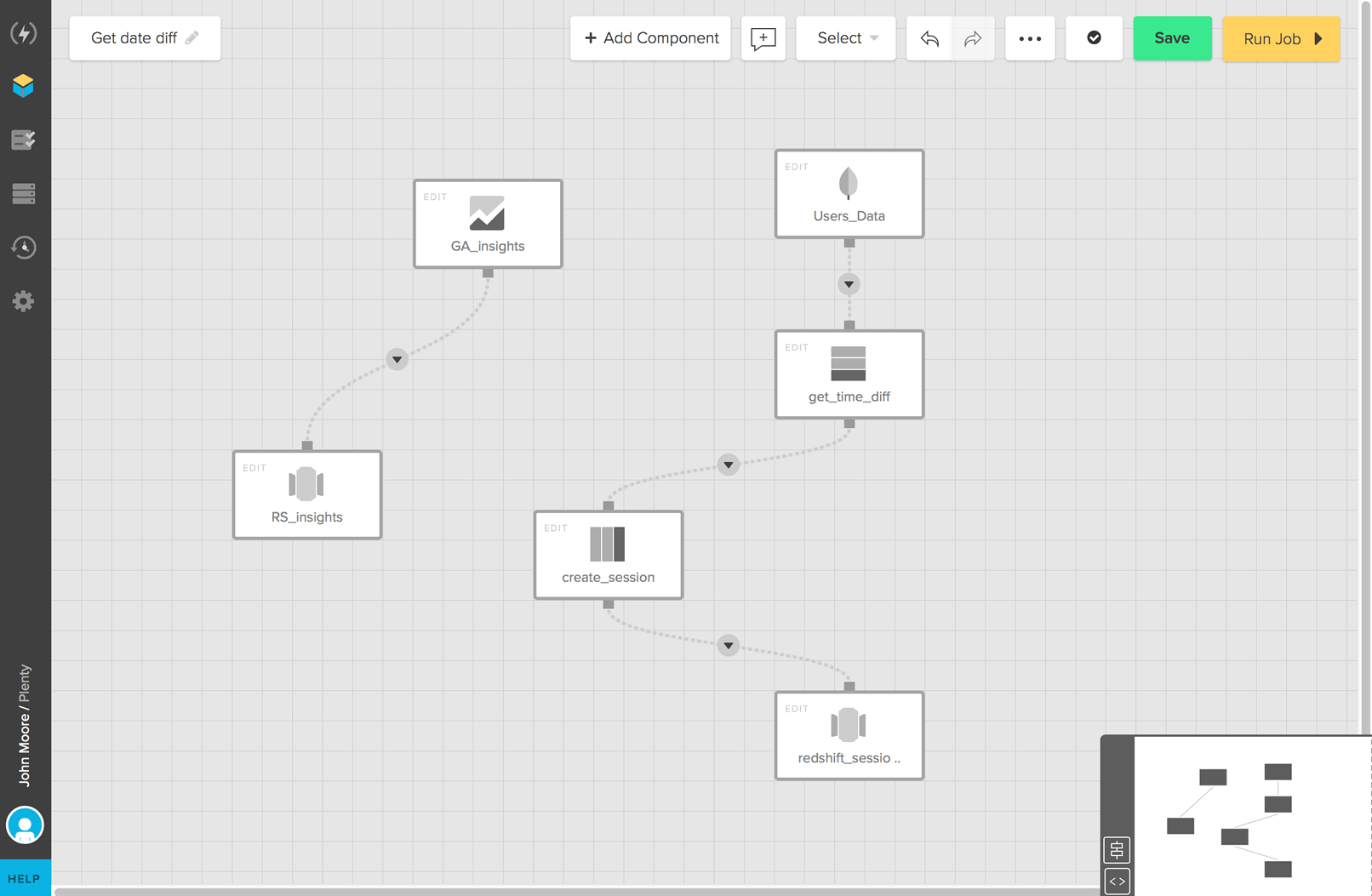

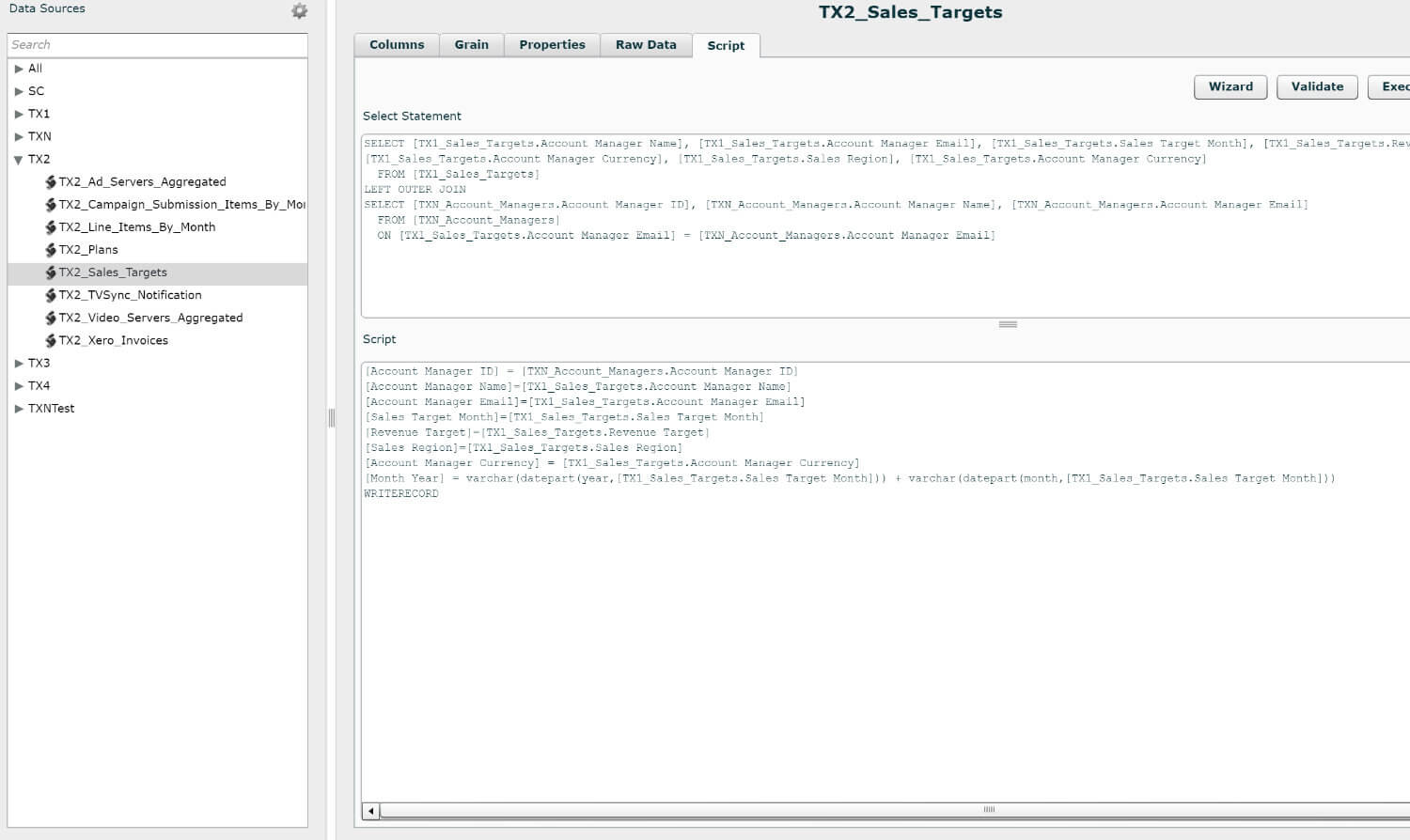

The ETL stage is an implementation phase that deals with writing scripts that perform extraction of data records from all sources, identified from the Data Sources stage, and perform various transformative manipulation on the underlying data and loads the result on a Data Warehouse. From there the Data Warehouse will either allow further manipulation on the data through the Data Preparation stage or load them directly onto the BI Tool to start the modelling and reports generation process.

ETL is performed and implemented entirely by an IT department, either in-house or through an outsource agency. ETL stage takes a considerable amount of time to implement and can add a significant additional cost to the BI solution budget, as the choices made at this ETL stage have a significant impact on the final pricing of the BI tool you choose.

So what is an ETL layer?

Extract